Get the most from AI while staying safe

Evaluate models using state-of-the-art tools built by leading experts. Collaborate on results.

Gage helps you answer the hard questions.

Apply benchmarks and custom evaluation code to your models.

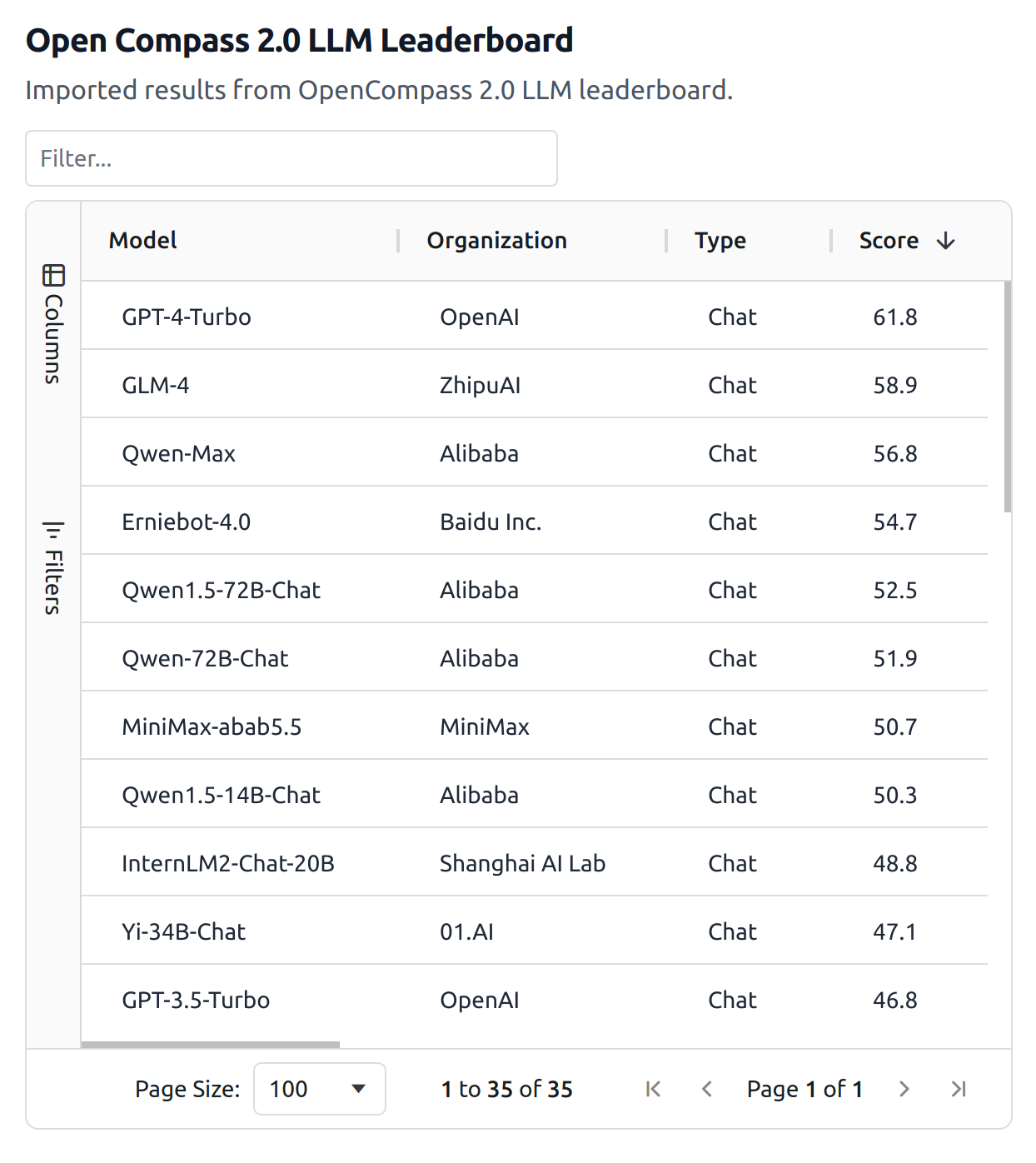

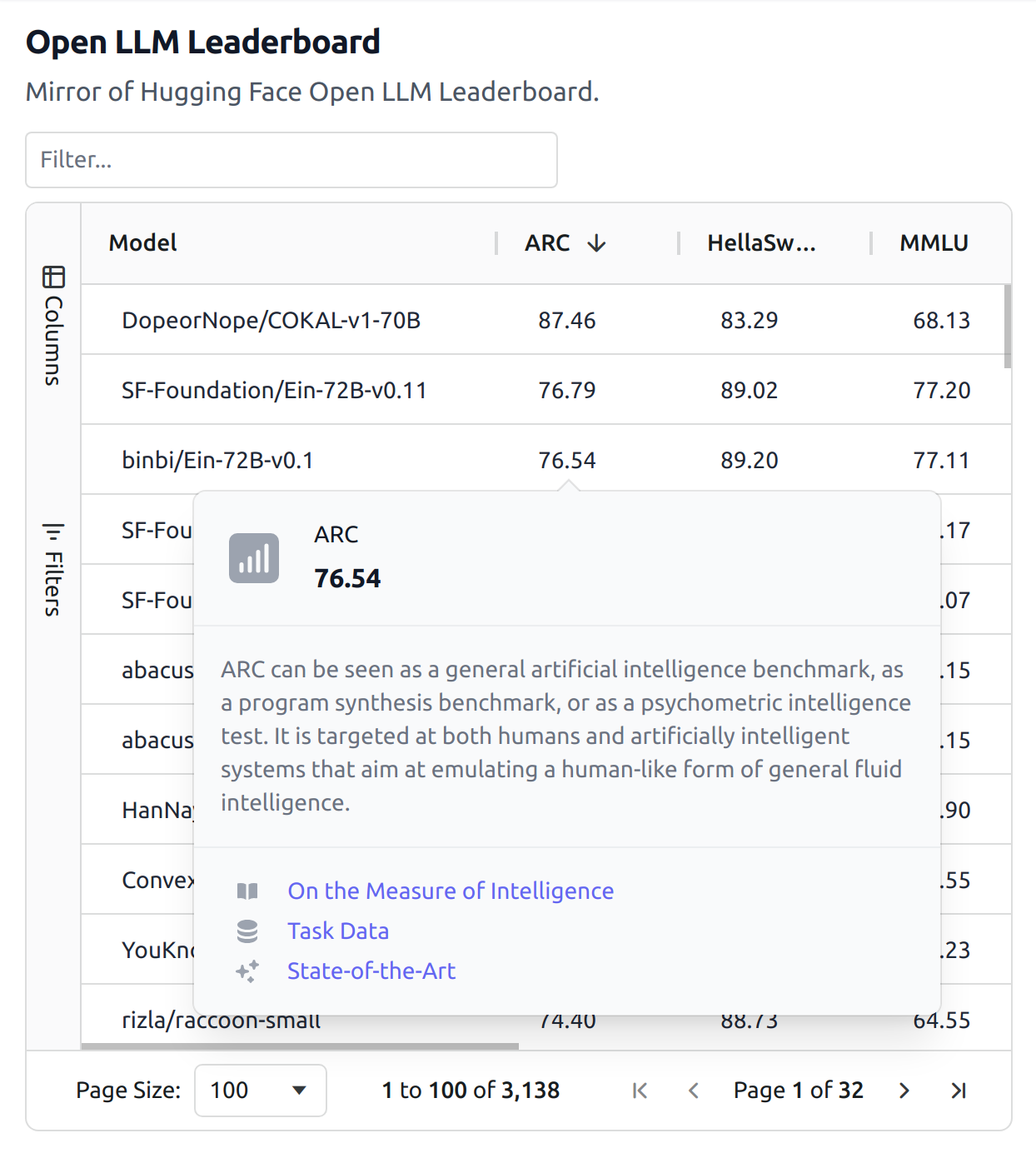

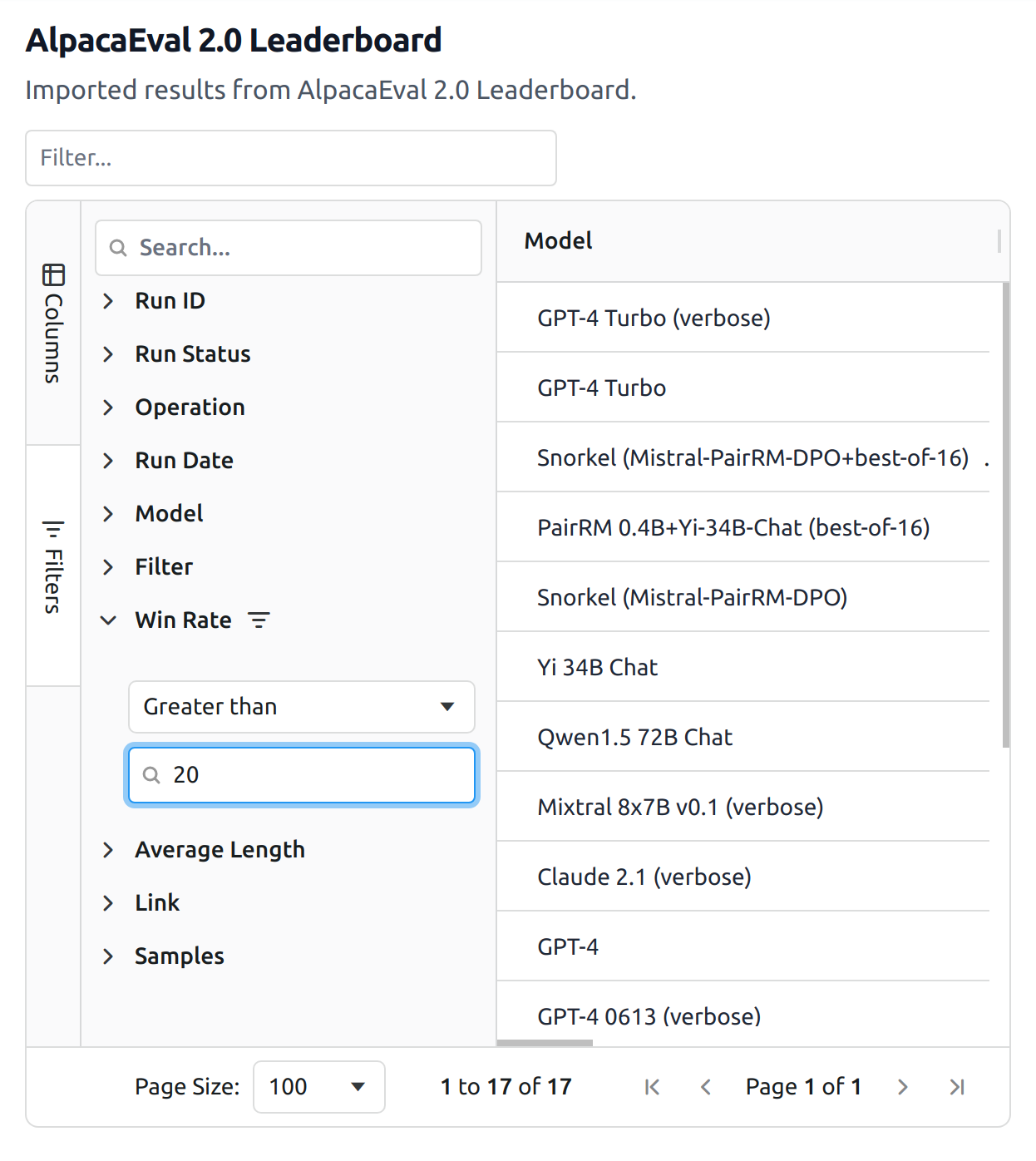

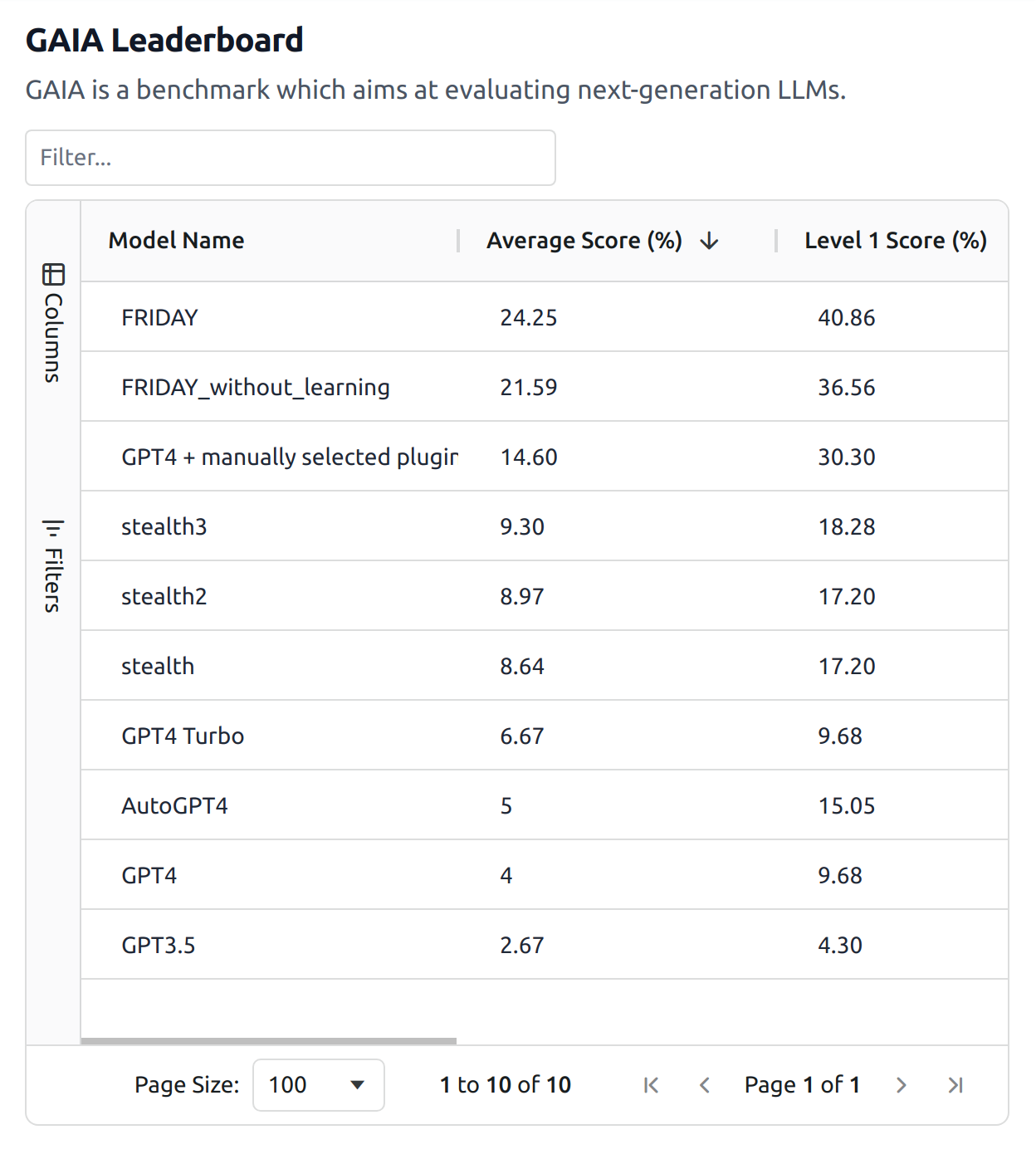

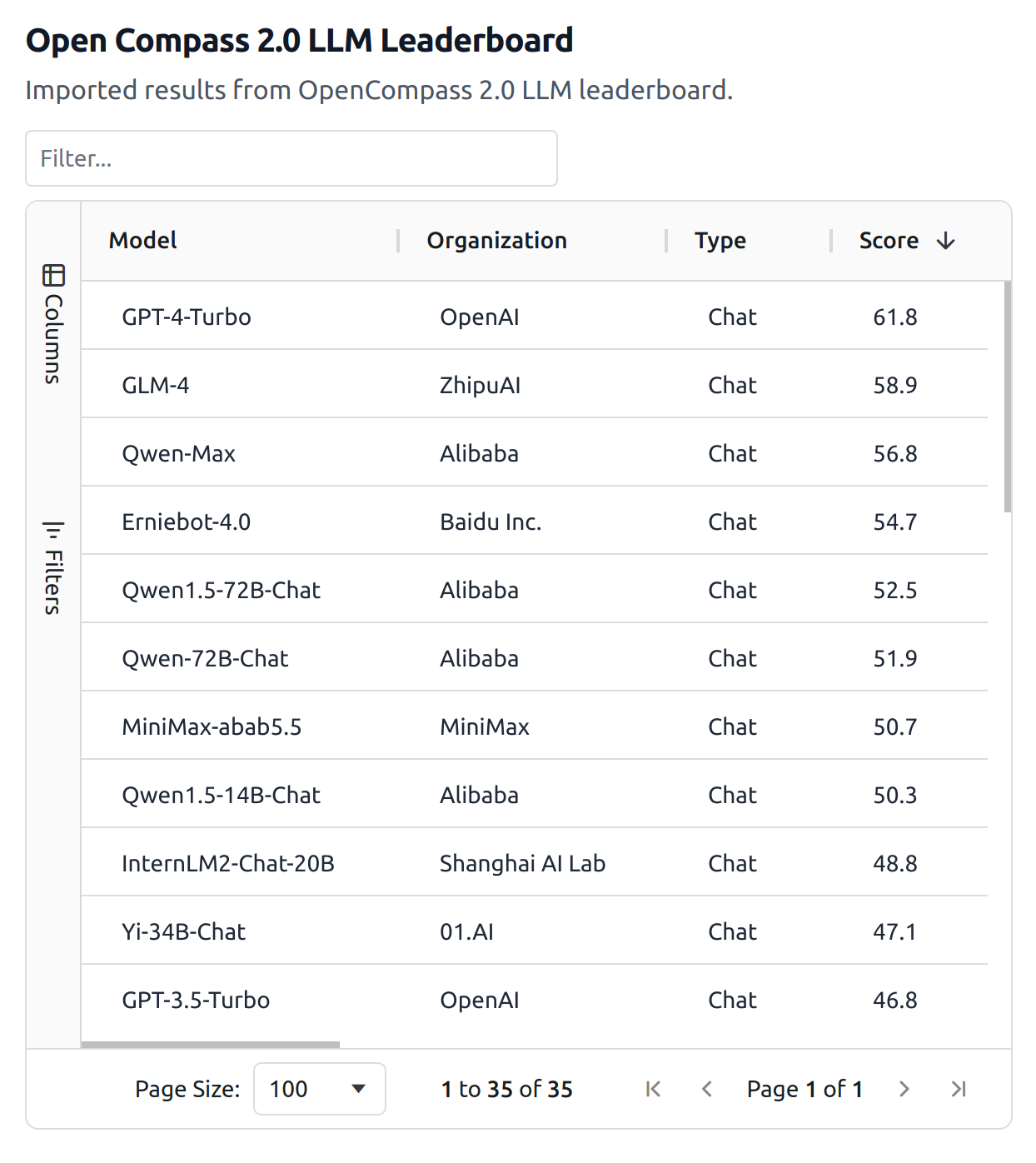

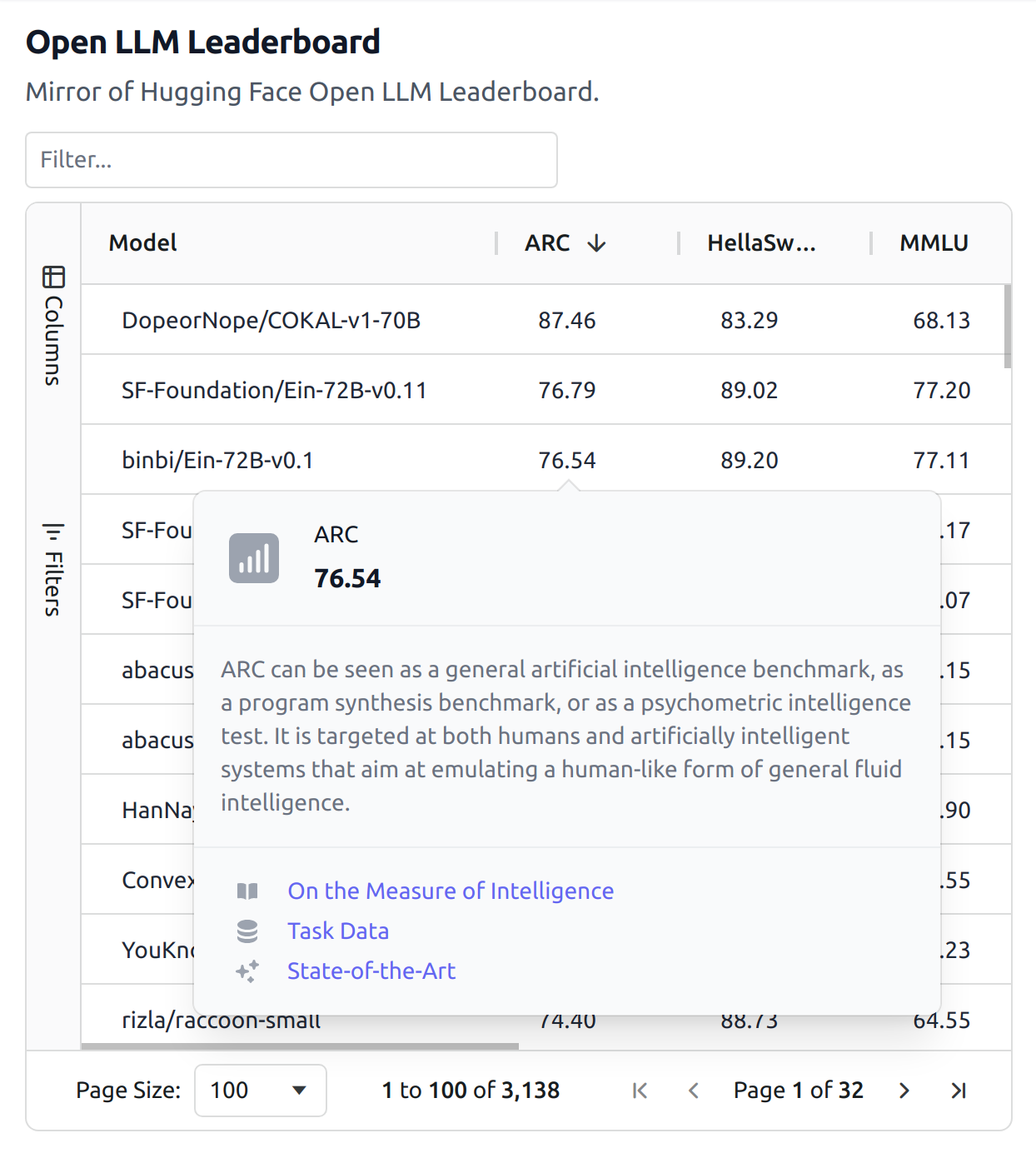

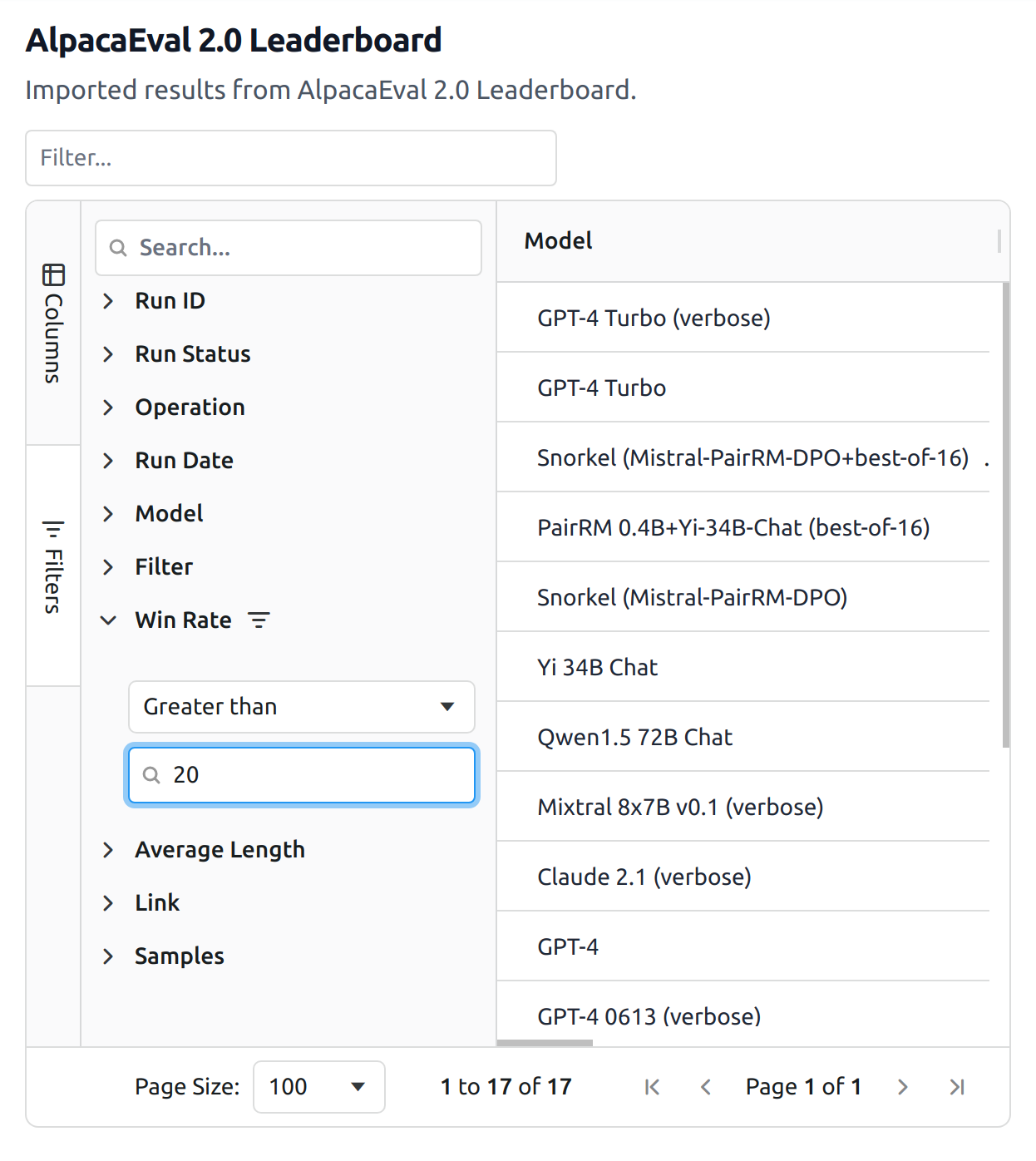

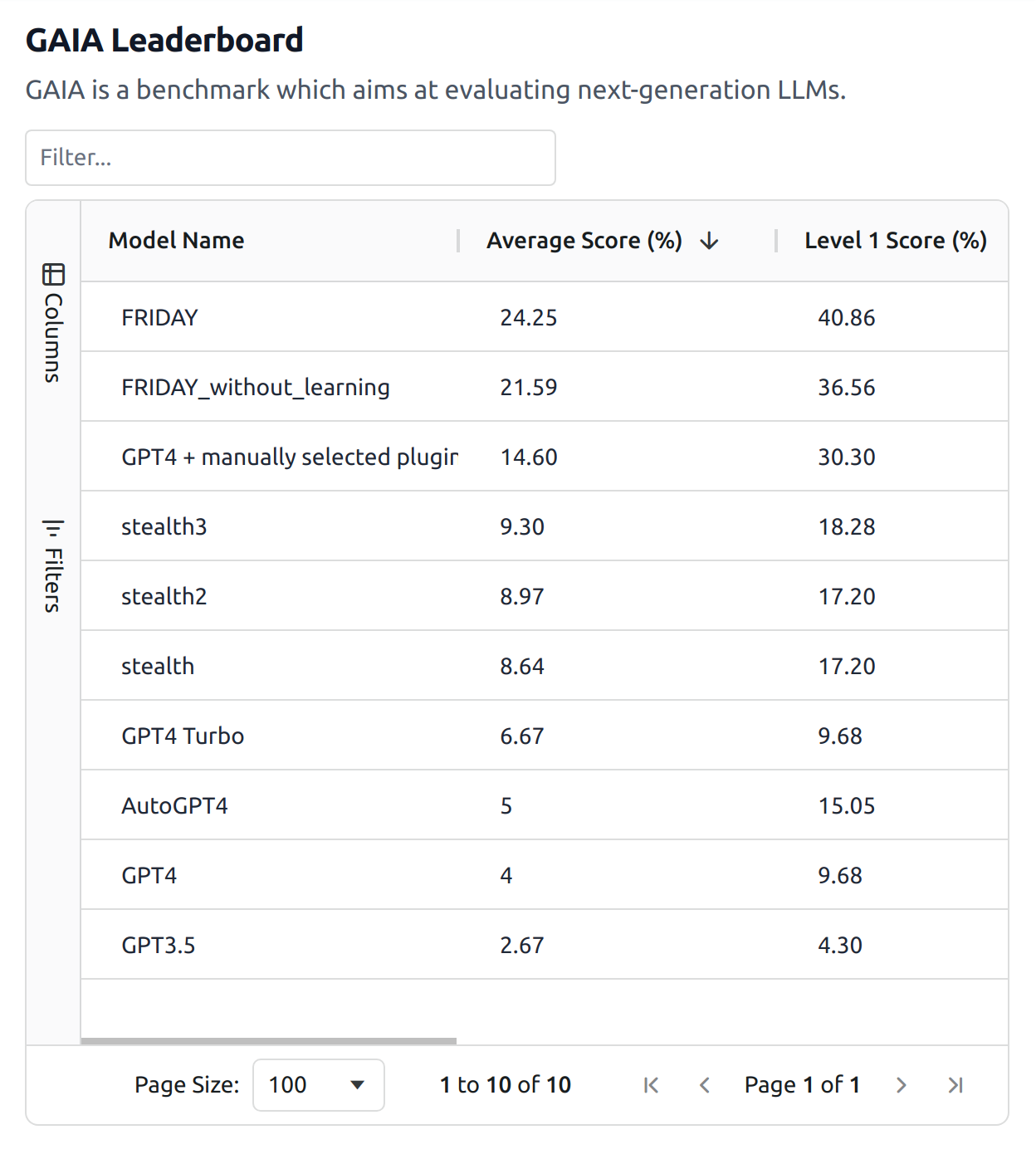

Publish results to public or private Gage leaderboards.

Compare and analyze results. Deepen your understanding.

Get input from team members and other experts on next steps.

Run any code, for any framework, and draw on the broader ecosystem of expert tools.

Publish evaluations to Gage Live leaderboards in seconds.

Gage was founded by the team behind Guild AI, a leading open-source experiment tracking toolkit.

Gage has two parts. Gage Run is an open-source toolkit for running evaluations and managing results. Gage Live is an online platform for publishing and exploring evaluation results.

Gage is currently in beta and under active development. If you'd like to contribute, request features, or otherwise stay up to date, join the Gage community and say hi!